Recurrent layer

Presentation

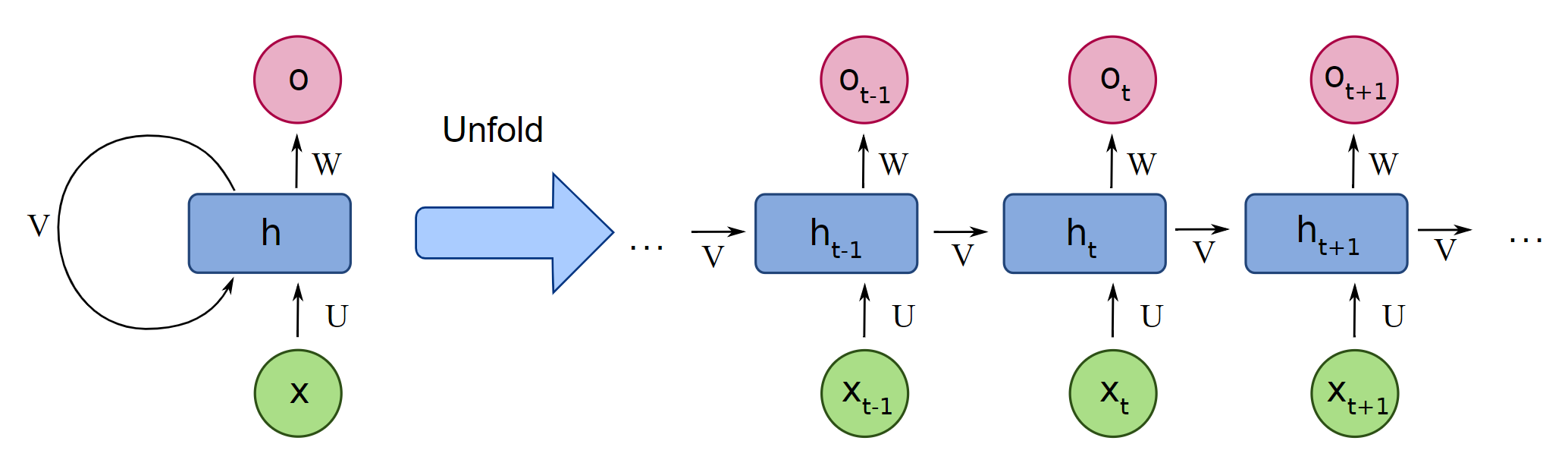

This layer is a simple fully connected layer with recurrence: each neuron of the layer receives, as an additional input, its own output from the previous time step (t-1). This allows the layer to exhibit temporal dynamic behavior. Recurrent neural networks (RNNs) can use this recurrence as a kind of memory to process temporal or sequential data.
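The recurrence described above can be sketched for a single neuron as follows. This is only an illustrative sketch, not the library's internal code: the `RecurrentNeuron` struct, its members and the `step` function are hypothetical names, and it assumes a tanh activation and an initial previous output of 0.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical illustration of one recurrent neuron (not the library's implementation).
struct RecurrentNeuron
{
    std::vector<float> weights;   // one weight per regular input
    float recurrentWeight = 0.0f; // weight applied to the neuron's own output at t-1
    float bias = 0.0f;
    float previousOutput = 0.0f;  // output at t-1, assumed to start at 0

    // Computes the output at time t and stores it so it can be fed back at t+1.
    float step(const std::vector<float>& inputs)
    {
        assert(inputs.size() == weights.size());
        float sum = bias + recurrentWeight * previousOutput;
        for (std::size_t i = 0; i < inputs.size(); ++i)
            sum += weights[i] * inputs[i];
        previousOutput = std::tanh(sum);
        return previousOutput;
    }
};
```

Calling `step` once per element of a sequence makes each output depend on the current input and, through `previousOutput`, on all earlier inputs.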

Declaration

This is the function used to declare a Recurrent layer.

    template <class ... TOptimizer>
    LayerModel Recurrence(int numberOfNeurons, activation activation = activation::tanh, TOptimizer ... optimizers);

Arguments

- numberOfNeurons: The number of neurons in the layer.

- activation: The activation function of the neurons of the layer. It is recommended to use the tanh activation function.

Here is an example of a neural network using a Recurrence layer.

    StraightforwardNeuralNetwork neuralNetwork({
        Input(1),
        Recurrence(12),
        FullyConnected(6),
        FullyConnected(1, activation::tanh)
    });

See an example of GRU layer on dataset

Algorithms and References

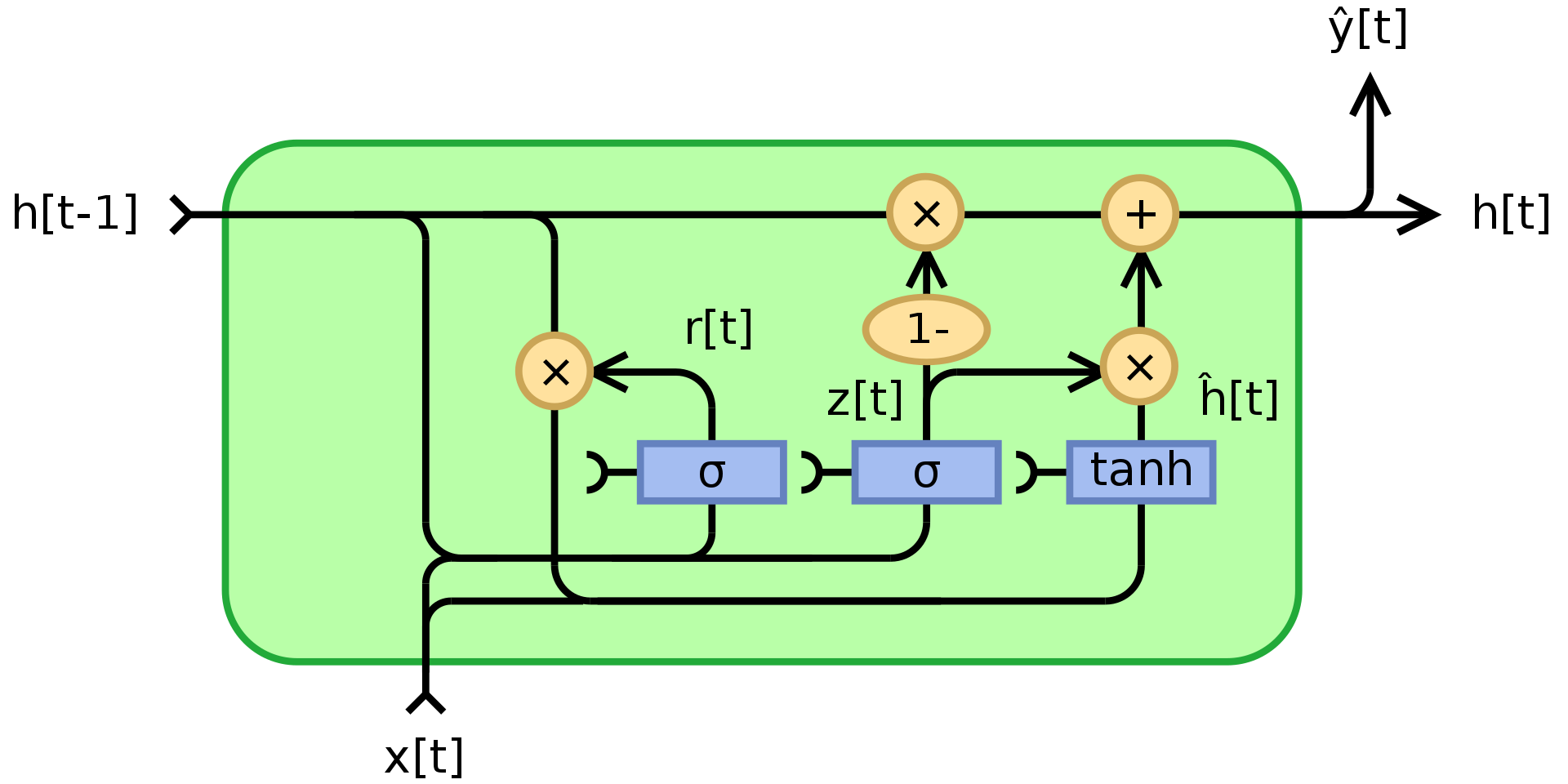

The GRU implementation is based on the Fully Gated Unit schema from the Gated recurrent unit Wikipedia page. Backpropagation through time is also used.
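To illustrate backpropagation through time, here is a minimal sketch for a single simple recurrent neuron with a scalar input, not a GRU and not the library's own code. All names (`Gradients`, `forward`, `loss`, `backpropagateThroughTime`) are hypothetical, and the sketch assumes a tanh activation, an initial state of 0, and a squared-error loss on the last output only.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical container for the gradients of the three parameters.
struct Gradients { double dw = 0.0, du = 0.0, db = 0.0; };

// Forward pass: h[t+1] = tanh(w * x[t] + u * h[t] + b), with h[0] = 0.
std::vector<double> forward(const std::vector<double>& x, double w, double u, double b)
{
    std::vector<double> h(x.size() + 1, 0.0);
    for (std::size_t t = 0; t < x.size(); ++t)
        h[t + 1] = std::tanh(w * x[t] + u * h[t] + b);
    return h;
}

// Assumed loss: half the squared error between the last output and the target.
double loss(const std::vector<double>& x, double w, double u, double b, double target)
{
    const double diff = forward(x, w, u, b).back() - target;
    return 0.5 * diff * diff;
}

// Walks the sequence backwards, accumulating the gradient contributed by every time step.
Gradients backpropagateThroughTime(const std::vector<double>& x, double w, double u, double b, double target)
{
    assert(!x.empty());
    const std::vector<double> h = forward(x, w, u, b);
    Gradients g;
    double dh = h.back() - target; // derivative of the loss w.r.t. the last output
    for (std::size_t t = x.size(); t > 0; --t)
    {
        const double dz = dh * (1.0 - h[t] * h[t]); // through tanh: 1 - tanh^2
        g.dw += dz * x[t - 1];
        g.du += dz * h[t - 1];
        g.db += dz;
        dh = dz * u; // propagate the error to the previous time step
    }
    return g;
}
```

Because the same weights are reused at every time step, their gradients are sums over all steps, which is why the loop accumulates with `+=`.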